How Security Teams Protect Machine Learning Models from Manipulation

Introduction to Machine Learning Model Security

Machine learning models are widely used across industries, powering decision-making and automating tasks. However, these models can be vulnerable to attackers who exploit weaknesses in their training data, their inputs, or the way they are deployed. Protecting machine learning systems from such threats is now a critical responsibility for security teams.

As machine learning becomes more integrated into healthcare, finance, and government, the consequences of model manipulation grow more serious. Security professionals must stay ahead of attackers by understanding new threats and developing effective defenses. This article explores the main risks facing machine learning models and the strategies that security teams use to protect them.

Understanding Threats to Machine Learning Models

Adversaries often target machine learning models through data poisoning, model inversion, and adversarial attacks. These tactics can degrade a model’s accuracy or even cause it to make harmful decisions. To counter them, security teams apply AI security practices aimed at preventing adversarial machine learning, including techniques that detect and block manipulation attempts. Data poisoning is particularly dangerous because it corrupts the training data itself, so the harm is built into the model rather than limited to individual predictions. Model inversion attacks, by contrast, try to reconstruct sensitive information about the training data from the model’s outputs. These risks highlight the need for strong defensive measures.

Detecting and Preventing Adversarial Attacks

Adversarial attacks involve feeding a model carefully crafted inputs, often altered by changes too small for a person to notice, that cause incorrect outputs. Security teams use testing frameworks to expose vulnerabilities before attackers can exploit them. Adversarial training, which exposes models to manipulated data during development, helps improve resilience. More information on adversarial attacks can be found at MIT’s Computer Science and Artificial Intelligence Laboratory.
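To make the idea concrete, here is a minimal sketch of the Fast Gradient Sign Method (FGSM), one well-known way to craft adversarial inputs, combined with a simple adversarial training loop. It assumes a tiny logistic-regression model written in pure Python; the function names and hyperparameters (`eps`, `lr`, `epochs`) are illustrative, and real systems would typically use a deep learning framework and a dedicated testing library.

```python
import math

def sigmoid(z: float) -> float:
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_proba(w: list[float], b: float, x: list[float]) -> float:
    # Probability that x belongs to class 1
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)

def fgsm(w: list[float], b: float, x: list[float], y: int, eps: float) -> list[float]:
    # For logistic regression, the gradient of the log-loss with respect to the input is (p - y) * w
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    # Move every feature by eps in the direction (sign of the gradient) that increases the loss
    return [xi + eps * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]

def train(data, epochs: int = 200, lr: float = 0.1, eps: float = 0.0):
    # Plain SGD on the log-loss; with eps > 0 each example is also trained on
    # in its FGSM-perturbed form, which is the core of adversarial training
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            batch = [x] if eps == 0 else [x, fgsm(w, b, x, y, eps)]
            for xv in batch:
                err = predict_proba(w, b, xv) - y
                w = [wi - lr * err * xi for wi, xi in zip(w, xv)]
                b -= lr * err
    return w, b
```

Training with `eps > 0` pushes the model to classify each point correctly even after a worst-case FGSM nudge of that size, at the cost of roughly doubling the work per training step.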

It is important to test machine learning models regularly with adversarial examples to understand their weaknesses. Some organizations use open-source tools to simulate attacks and measure how well models withstand them. According to a report from a leading international think tank, adversarial attacks are a growing concern in both academic and commercial settings.
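A regular robustness test can be as simple as comparing clean accuracy with accuracy under attack at several perturbation budgets. The sketch below assumes a linear classifier, for which the worst-case L-infinity perturbation can be computed exactly instead of searched for; with non-linear models, this is the step where an attack tool would generate adversarial examples. All function names are hypothetical.

```python
def score(w: list[float], b: float, x: list[float]) -> float:
    # Linear decision score; positive means class 1
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def clean_accuracy(w, b, data) -> float:
    return sum((score(w, b, x) > 0) == (y == 1) for x, y in data) / len(data)

def robust_accuracy(w, b, data, eps: float) -> float:
    # For a linear model, the worst perturbation with every feature changed by at most eps
    # lowers the score in the true class's favor by exactly eps * ||w||_1
    l1 = sum(abs(wi) for wi in w)
    correct = 0
    for x, y in data:
        signed = score(w, b, x) if y == 1 else -score(w, b, x)
        correct += signed - eps * l1 > 0
    return correct / len(data)

def robustness_report(w, b, data, eps_values) -> dict[float, float]:
    # Accuracy under attack for each perturbation budget
    return {eps: robust_accuracy(w, b, data, eps) for eps in eps_values}
```

A sharp drop between `eps=0` and small budgets is a signal that the model relies on fragile features and may benefit from adversarial training.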

Defending Against Data Poisoning

Data poisoning is another serious threat in which attackers insert false or mislabeled records into training datasets, causing the model to learn incorrect patterns. Security teams monitor data sources, validate input data, and use anomaly-detection tools to spot suspicious changes. Regular audits of data pipelines are also essential in reducing the risk of poisoning attacks.
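Validating data before it reaches the training pipeline is one of the simplest defenses. The following sketch checks each incoming record against a source allowlist, per-field ranges, and the set of valid labels, and quarantines anything that fails for human review. The schema, field names, and source names are hypothetical placeholders, not a standard format.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class FieldRule:
    min_value: float
    max_value: float

# Hypothetical data contract; a real one would come from the team's own pipeline
SCHEMA = {"age": FieldRule(0, 120), "income": FieldRule(0, 10_000_000)}
ALLOWED_LABELS = {0, 1}
TRUSTED_SOURCES = {"internal_crm", "partner_feed_a"}

def validate_record(record: dict) -> list[str]:
    # Returns a list of problems; an empty list means the record passed every check
    problems = []
    if record.get("source") not in TRUSTED_SOURCES:
        problems.append(f"untrusted source: {record.get('source')!r}")
    for name, rule in SCHEMA.items():
        value = record.get(name)
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            problems.append(f"{name}: missing or non-numeric")
        elif not rule.min_value <= value <= rule.max_value:  # NaN also fails this check
            problems.append(f"{name}: {value} outside [{rule.min_value}, {rule.max_value}]")
    if record.get("label") not in ALLOWED_LABELS:
        problems.append(f"invalid label: {record.get('label')!r}")
    return problems

def split_batch(records: list[dict]) -> tuple[list[dict], list[dict]]:
    # Accepted records go on to training; quarantined ones are held back for review
    accepted, quarantined = [], []
    for r in records:
        (quarantined if validate_record(r) else accepted).append(r)
    return accepted, quarantined
```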

Data validation is crucial, especially for models trained on data collected from external or untrusted sources. Automated anomaly-detection tools can flag unexpected changes in the data or in model behavior. Researchers at Berkeley have published work on advanced methods for detecting data poisoning.
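Automated anomaly detection can start with simple statistics. This sketch assumes a single numeric feature and binary labels. It flags new values whose robust z-score (based on the median and median absolute deviation of a trusted reference set) exceeds a threshold, and it warns when the share of positive labels in a new batch drifts from the reference, a pattern that label-flipping attacks can produce. The thresholds are illustrative defaults, not recommendations.

```python
import statistics

def robust_z_scores(reference: list[float], values: list[float]) -> list[float]:
    # Median and MAD are far less affected by a few poisoned points than mean and std
    med = statistics.median(reference)
    mad = statistics.median(abs(v - med) for v in reference)
    scale = 1.4826 * mad  # rescales MAD to match the standard deviation for normal data
    if scale == 0:
        return [0.0 if v == med else float("inf") for v in values]
    return [abs(v - med) / scale for v in values]

def flag_outliers(reference: list[float], values: list[float], threshold: float = 3.5) -> list[int]:
    # Indices of new values that look anomalous relative to the reference data
    return [i for i, z in enumerate(robust_z_scores(reference, values)) if z > threshold]

def label_shift(reference_labels: list[int], new_labels: list[int], tolerance: float = 0.1) -> bool:
    # True if the positive-label rate moved by more than `tolerance`
    ref_rate = sum(reference_labels) / len(reference_labels)
    new_rate = sum(new_labels) / len(new_labels)
    return abs(new_rate - ref_rate) > tolerance
```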

Securing Model Access and Integrity

Access control is critical to prevent unauthorized manipulation of machine learning models. Security teams implement strict authentication and authorization protocols. They also use encryption to protect models during transmission and storage. The U.S. Department of Homeland Security provides best practices for securing AI systems.
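Authorization rules for model operations are often expressed as role-based access control. The sketch below assumes a hypothetical set of roles and actions and follows a deny-by-default policy: a request succeeds only if one of the caller’s roles explicitly grants the action. In practice these rules would usually live in an identity provider or policy engine rather than in application code.

```python
from enum import Enum

class Action(Enum):
    PREDICT = "predict"
    READ_WEIGHTS = "read_weights"
    UPDATE_WEIGHTS = "update_weights"
    DEPLOY = "deploy"

# Hypothetical role-to-permission mapping following least privilege
ROLE_PERMISSIONS: dict[str, frozenset] = {
    "api_client": frozenset({Action.PREDICT}),
    "data_scientist": frozenset({Action.PREDICT, Action.READ_WEIGHTS, Action.UPDATE_WEIGHTS}),
    "release_engineer": frozenset({Action.READ_WEIGHTS, Action.DEPLOY}),
}

class AccessDenied(Exception):
    pass

def is_allowed(roles: set[str], action: Action) -> bool:
    # Deny by default: unknown roles grant nothing
    return any(action in ROLE_PERMISSIONS.get(role, frozenset()) for role in roles)

def authorize(roles: set[str], action: Action) -> None:
    # Raise instead of returning False so callers cannot silently ignore a denial
    if not is_allowed(roles, action):
        raise AccessDenied(f"roles {sorted(roles)} may not perform {action.value!r}")
```

Keeping the public prediction role separate from roles that can read or change weights limits what a stolen API credential can do.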

Limiting who can access the model, both in development and in production, helps reduce the attack surface. Encryption keeps model files unreadable if they are intercepted or stolen, while digital signatures let security teams verify that a model has not been tampered with before it is deployed.
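A pre-deployment integrity check might look like the sketch below. Note that it substitutes HMAC-SHA256 from Python’s standard library for a true digital signature: an HMAC uses one shared secret key for both creating and checking the tag, whereas a digital signature uses a private signing key and a public verification key, so anyone able to verify an HMAC can also forge one. The overall flow, recording a tag at build time and refusing to load a model whose tag does not match, is the same. The function names are hypothetical.

```python
import hashlib
import hmac
from pathlib import Path

def model_tag(model_bytes: bytes, key: bytes) -> str:
    # HMAC-SHA256 over the serialized model file
    return hmac.new(key, model_bytes, hashlib.sha256).hexdigest()

def verify_model(model_bytes: bytes, key: bytes, expected_tag: str) -> bool:
    # compare_digest avoids leaking information through comparison timing
    return hmac.compare_digest(model_tag(model_bytes, key), expected_tag)

def load_verified_model(path: str, key: bytes, expected_tag: str) -> bytes:
    # Refuse to return model bytes whose tag differs from the one recorded at build time
    data = Path(path).read_bytes()
    if not verify_model(data, key, expected_tag):
        raise ValueError(f"integrity check failed for {path}")
    return data
```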

Model Monitoring and Continuous Assessment

Once deployed, machine learning models require ongoing monitoring to detect unusual activity or performance drops. Security teams track inputs and outputs, looking for signs of manipulation. Automated tools can alert teams to sudden changes, allowing for rapid response and mitigation.
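As one illustration of output monitoring, the sketch below tracks the share of positive predictions in a sliding window and signals an alert when it moves too far from an expected baseline. It assumes a binary classifier, and the baseline, window size, and tolerance are hypothetical values that a team would tune from its own traffic.

```python
from collections import deque

class OutputMonitor:
    """Tracks the positive-prediction rate over a sliding window and flags sudden shifts."""

    def __init__(self, baseline_rate: float, window: int = 100, tolerance: float = 0.15):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # oldest predictions drop out automatically

    def observe(self, prediction: int) -> bool:
        # Record one prediction (0 or 1); return True when an alert should fire
        self.recent.append(prediction)
        if len(self.recent) < self.recent.maxlen:
            return False  # wait for a full window before judging
        rate = sum(self.recent) / len(self.recent)
        return abs(rate - self.baseline_rate) > self.tolerance
```

The same pattern applies to inputs, for example by tracking the fraction of requests with out-of-range feature values.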

Continuous monitoring is essential because attackers may attempt to manipulate models over time, not just during initial deployment. Organizations often implement dashboards and alerting systems that notify staff of anomalies. In addition, regular performance reviews can help ensure the model continues to function as expected and has not been compromised.
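To catch slower, gradual manipulation, teams often compare the current distribution of model scores with a reference period. One common measure is the Population Stability Index (PSI), sketched below under the assumption that scores lie in [0, 1] and are grouped into fixed bins. A frequently cited rule of thumb treats a PSI above about 0.25 as a significant shift, but the right alert threshold depends on the application.

```python
import bisect
import math

def bin_fractions(values: list[float], edges: list[float]) -> list[float]:
    # Fraction of values per bin; out-of-range values are clamped into the end bins
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), len(counts) - 1)] += 1
    return [c / len(values) for c in counts]

def psi(reference: list[float], current: list[float], edges: list[float], floor: float = 1e-4) -> float:
    # PSI = sum over bins of (actual - expected) * ln(actual / expected)
    total = 0.0
    for expected, actual in zip(bin_fractions(reference, edges), bin_fractions(current, edges)):
        expected, actual = max(expected, floor), max(actual, floor)  # avoid log(0) for empty bins
        total += (actual - expected) * math.log(actual / expected)
    return total
```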

Collaboration and Security Training

Effective defense requires collaboration between data scientists, engineers, and security professionals. Regular training helps teams stay informed about evolving threats and new defensive techniques. Security awareness is vital as attackers continue to develop new ways to target machine learning systems.

Cross-team communication ensures that everyone understands the risks and the steps needed to protect machine learning assets. Training sessions can include simulated attack scenarios, updates on the latest threats, and workshops on secure coding practices for machine learning applications. A security-focused culture turns these practices into everyday habits rather than one-off exercises.

Emerging Trends and Future Challenges

As machine learning technology advances, so do the tactics used by attackers. New threats, such as model extraction and membership inference attacks, are becoming more common. Model extraction lets adversaries steal intellectual property by replicating a model through repeated queries, while membership inference lets them determine whether a specific record was used to train a model.
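Teams can check their own models for membership-inference leakage before an attacker does. A simple audit, sketched below, takes the model’s confidence on examples known to be in the training set and on held-out examples, then finds the best accuracy that a "guess member if confidence is at least t" rule can achieve. With equal-sized groups, a result near 0.5 means the rule does no better than guessing, while values well above 0.5 suggest the model behaves noticeably differently on its training data. This is a basic confidence-threshold test with a hypothetical function name, not a full attack evaluation.

```python
def best_threshold_accuracy(member_conf: list[float], nonmember_conf: list[float]) -> float:
    # Pair each confidence with whether it came from a training member
    scored = [(c, True) for c in member_conf] + [(c, False) for c in nonmember_conf]
    # Try every observed confidence as a threshold, plus +inf (guess "non-member" for all)
    thresholds = sorted({c for c, _ in scored}) + [float("inf")]
    best = 0.0
    for t in thresholds:
        correct = sum((c >= t) == is_member for c, is_member in scored)
        best = max(best, correct / len(scored))
    return best
```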

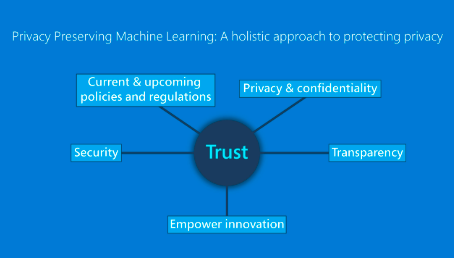

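Security teams are exploring advanced defense mechanisms, such as federated learning and differential privacy, to help reduce risks. Federated learning trains models across decentralized data sources without collecting the raw data in one place, which limits exposure of sensitive training data, although it must still guard against poisoned updates from malicious participants. Differential privacy techniques add carefully calibrated noise during training or to outputs, making it harder for attackers to extract sensitive information about individual records.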

Staying ahead of emerging threats requires ongoing research, collaboration with academic institutions, and participation in industry forums. Security teams should also track updates from standards organizations and government agencies to keep up with best practices.

Conclusion

Protecting machine learning models from manipulation is a complex and ongoing task. Security teams must combine technical controls, regular monitoring, and cross-team collaboration to defend against adversarial attacks and data poisoning. As machine learning becomes more common, strong security practices are essential to maintain trust and reliability in these systems.

Looking forward, organizations should invest in continuous improvement of their security processes, adopt new defensive technologies, and foster a culture of vigilance. By doing so, they can help ensure that machine learning remains a safe and valuable tool for innovation.

FAQ

What is an adversarial attack in machine learning?

An adversarial attack involves creating inputs that intentionally cause a machine learning model to make incorrect predictions or classifications.

How do security teams detect data poisoning?

They use monitoring tools, validate data sources, and perform regular audits to identify unusual or suspicious changes in training data.

Why is access control important for machine learning models?

Access control prevents unauthorized users from modifying or stealing models, which helps protect their integrity and confidentiality.