High-Context AI Models and Enterprise Reasoning Workflows

Enterprises are no longer experimenting with AI in isolation; they are embedding models directly into core decision-making, compliance processes, and long-running workflows that depend on memory, reasoning, and traceability. In these environments, the ability of a model to hold and reason over large amounts of context becomes a decisive capability rather than a performance bonus. High-context AI models allow organizations to move beyond short prompt interactions toward systems that understand documents, conversations, policies, and evolving states over time. Among the models positioned for this role, Claude Opus 4.6 is frequently evaluated for its capacity to support deep reasoning across complex enterprise inputs. Understanding why context length matters, and how different models trade off reasoning depth, speed, and cost, is now essential for technical and business leaders alike.

Why Context Length Changes AI Capabilities

Context length fundamentally alters what an AI system can do inside an enterprise environment. Short-context models are effective for transactional tasks such as drafting a response, summarizing a paragraph, or answering a narrowly scoped question. Enterprise workflows rarely operate in such isolation. A procurement review may involve dozens of policy documents, prior decisions, supplier communications, and regulatory constraints that must all be considered together. A compliance investigation may span months of correspondence, logs, and contractual clauses that cannot be meaningfully compressed without losing nuance.

When a model can ingest and reason over large bodies of text at once, it behaves less like a reactive tool and more like a system that maintains continuity across the material. This shift allows teams to reduce brittle handoffs between tools, minimize lossy summarization stages, and preserve source-level fidelity throughout the workflow. In practice, longer context can enable more accurate reasoning because the model does not have to guess what an upstream summary omitted. It can reference original language, detect contradictions, and track dependencies that would otherwise be invisible.
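Whether a workflow can be handled "at once" depends on whether its material fits in the model's context window. The following is a minimal Python sketch of that check. It assumes a rough heuristic of about four characters per token for English prose; real counts depend on each model's tokenizer, and the `context_window` and `reserve_for_output` values in the example are hypothetical placeholders, not published limits for any model named in this article.

```python
from dataclasses import dataclass

# Assumption: ~4 characters per token is a rough heuristic for English prose.
# Real counts depend on the specific model's tokenizer.
CHARS_PER_TOKEN = 4


@dataclass
class Document:
    name: str
    text: str


def estimate_tokens(text: str) -> int:
    """Approximate a token count using a fixed characters-per-token ratio."""
    # Ceiling division: any non-empty text counts as at least one token.
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(
    docs: list[Document], context_window: int, reserve_for_output: int
) -> tuple[bool, int]:
    """Return whether all documents fit, plus the estimated input token total.

    `context_window` and `reserve_for_output` are hypothetical budget values;
    substitute the limits documented for the model you actually use.
    """
    total = sum(estimate_tokens(d.text) for d in docs)
    return total <= context_window - reserve_for_output, total


if __name__ == "__main__":
    docs = [
        Document("procurement_policy.txt", "Suppliers must be approved. " * 2000),
        Document("supplier_emails.txt", "Re: delivery schedule update. " * 5000),
    ]
    ok, total = fits_in_context(docs, context_window=200_000, reserve_for_output=8_000)
    print(f"estimated tokens: {total}, fits: {ok}")
```

If the check fails, the workflow is a candidate for retrieval or staged summarization, which reintroduces exactly the lossy steps the paragraph above describes.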

For enterprises, this capability directly affects risk. Decisions based on incomplete context are harder to justify, audit, or defend. When the relevant material fits within the context window, high-context models make it possible to keep the entire decision trail in front of the model at once, aligning AI outputs more closely with internal governance and external regulatory expectations.


Enterprise Use Cases That Require Deep Reasoning

Deep reasoning becomes indispensable in enterprise scenarios where surface-level pattern matching is insufficient. Legal teams reviewing complex contracts need models that can reconcile clauses across hundreds of pages, recognize conditional obligations, and flag conflicts based on context rather than keywords alone. Financial institutions performing risk analysis must correlate market data, internal policies, and historical decisions to produce defensible assessments that stand up to scrutiny.

Another common case is internal knowledge management. Large organizations accumulate years of documentation that spans different systems, authors, and formats. High-context models allow employees to query large portions of this corpus together, receiving answers that reflect institutional knowledge rather than isolated fragments. This reduces reliance on manual searches and lowers the cognitive burden on subject matter experts who would otherwise act as bottlenecks.

Operational planning also benefits from reasoning-heavy models. Scenario analysis, capacity forecasting, and incident postmortems all require the ability to synthesize timelines, dependencies, and causal relationships. In these cases, a model’s value is measured not by fluency but by its capacity to reason coherently across a dense and interconnected information space.

How Claude Opus 4.6 Handles Complex Inputs

Claude Opus 4.6 is often evaluated specifically for its ability to manage complex, high-volume inputs without collapsing into shallow generalities. In practical enterprise testing, this tends to show up in how the model maintains consistency across long documents and extended interactions. When the application supplies the earlier conversation or document set with each request, the model can preserve its internal logic, allowing follow-up questions to build naturally on prior context instead of being treated as isolated events.

This behavior is particularly relevant for workflows that evolve over time. For example, during policy drafting or regulatory response preparation, teams may iteratively refine language while cross-checking it against earlier drafts and external requirements. A high-context model can track these changes, recognize what has already been agreed, and surface the implications of new edits without the team having to restate earlier decisions or re-summarize prior drafts.
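One way an application can help a model focus on what changed between drafts is to send the revised draft together with an explicit diff against the last approved version. The sketch below uses Python's standard `difflib` module; the function names and the prompt wording are illustrative assumptions, not a format required by any model provider.

```python
import difflib


def draft_change_summary(approved: str, revised: str) -> str:
    """Return a unified diff between the last approved draft and a revision."""
    diff = difflib.unified_diff(
        approved.splitlines(),
        revised.splitlines(),
        fromfile="approved_draft",
        tofile="revised_draft",
        lineterm="",  # splitlines() strips newlines, so don't add them back per header
    )
    return "\n".join(diff)


def build_review_prompt(approved: str, revised: str) -> str:
    """Assemble a hypothetical review prompt: the full revision plus its diff."""
    return (
        "Here is the revised policy draft:\n\n"
        f"{revised}\n\n"
        "Changes since the last approved version:\n\n"
        f"{draft_change_summary(approved, revised)}\n\n"
        "Identify any implications of these changes for earlier commitments."
    )


if __name__ == "__main__":
    approved = "Data is retained for 12 months.\nAccess requires manager approval."
    revised = "Data is retained for 24 months.\nAccess requires manager approval."
    print(draft_change_summary(approved, revised))
```

Sending the full revision alongside the diff keeps the complete text available for cross-checking, while the diff makes the new edits hard to overlook.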

Another important characteristic is reasoning transparency. While no model provides perfect explainability, Claude Opus 4.6 tends to tie its conclusions to specific passages of the input context. This makes it easier for reviewers to validate conclusions and increases trust in environments where human oversight is mandatory. The result is not automation without accountability, but augmentation that supports informed decision-making.
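Reviewers can make this kind of source-referencing easier to verify by labeling each input passage with a stable identifier and asking the model to cite those identifiers in its answer. The sketch below shows both halves, assuming a hypothetical `[DOC-n]` labeling convention that the application defines itself; it does not rely on any vendor API. Note that it only checks that cited identifiers exist in the supplied context. It cannot confirm that a cited passage actually supports the claim, which still requires human review.

```python
import re

# Hypothetical citation convention: passages are labeled [DOC-1], [DOC-2], ...
CITATION_PATTERN = re.compile(r"\[DOC-(\d+)\]")


def label_passages(passages: list[str]) -> str:
    """Prefix each passage with a numbered identifier the model can cite."""
    return "\n\n".join(f"[DOC-{i}] {text}" for i, text in enumerate(passages, start=1))


def check_citations(answer: str, passage_count: int) -> dict[str, list[int]]:
    """Split the citations found in an answer into known and unknown identifiers."""
    cited = sorted({int(n) for n in CITATION_PATTERN.findall(answer)})
    valid = [n for n in cited if 1 <= n <= passage_count]
    unknown = [n for n in cited if not (1 <= n <= passage_count)]
    return {"valid": valid, "unknown": unknown}


if __name__ == "__main__":
    context = label_passages([
        "Clause 4.2: Either party may terminate with 90 days' notice.",
        "Clause 7.1: Termination fees apply within the first year.",
    ])
    answer = "Early termination triggers a fee [DOC-2], subject to notice [DOC-1] and [DOC-5]."
    print(check_citations(answer, passage_count=2))
    # → {'valid': [1, 2], 'unknown': [5]}
```

An unknown identifier such as `DOC-5` is a useful signal to route the answer back for human review before it enters a decision record.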

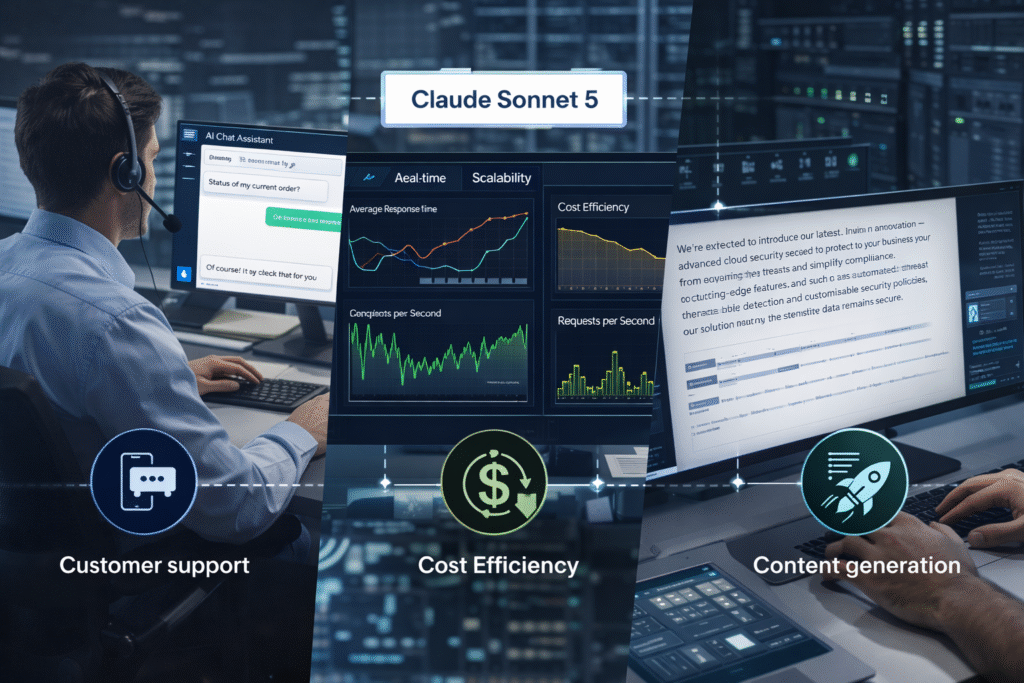

When Claude Sonnet 5 Is the Better Tradeoff

High-context capability is not universally required, and enterprises often benefit from a portfolio approach to model selection. Claude Sonnet 5 is commonly positioned as a pragmatic alternative when workloads prioritize speed, cost efficiency, or high throughput over maximum reasoning depth. Customer support automation, internal chat assistants, and routine content generation tasks often fall into this category.

In these scenarios, the marginal benefit of extremely long context is limited, while latency and operational cost become more visible constraints. Sonnet 5 offers a balance that allows teams to scale AI-powered features broadly without over-provisioning capabilities that are rarely used. From an architectural perspective, this reduces complexity and simplifies capacity planning, especially in systems that must respond in near real time.

The key distinction is alignment with task requirements. Enterprises that treat all AI workloads as identical risk either overspending or underdelivering. By reserving high-context models for reasoning-heavy workflows and deploying lighter models for transactional use cases, organizations can optimize both performance and governance across their AI estate.

GPT 5.3 Codex in Enterprise Engineering Teams

Engineering teams within large organizations often have distinct needs compared to legal, finance, or operations groups. Code generation, refactoring, and technical analysis demand precision, adherence to syntax, and awareness of existing codebases. In this domain, GPT 5.3 Codex is typically assessed for its strengths in software-oriented reasoning rather than general enterprise context handling.

Codex-style models are effective when the primary context consists of source code, configuration files, and technical documentation. They support workflows such as reviewing pull requests, generating test cases, and assisting with migration efforts. While context length still matters, the structure of the input is more constrained, and reasoning depth is focused on correctness rather than cross-domain synthesis.

For enterprises, this reinforces the idea that no single model should be expected to excel at every task. Engineering teams may rely on a different optimization profile than compliance or strategy functions, and aligning model capabilities with domain-specific requirements leads to more reliable outcomes and clearer accountability.

Selecting Models for Enterprise Reliability

Model selection at the enterprise level is ultimately a question of reliability rather than novelty. High-context models enable deeper reasoning, but they also introduce considerations around cost predictability, evaluation complexity, and operational oversight. Organizations that succeed with AI tend to formalize these tradeoffs through documented workflows, pilot programs, and ongoing monitoring rather than ad hoc experimentation.

A common pattern is to start by mapping workflows according to their context and reasoning demands. Tasks that involve long-lived states, regulatory exposure, or multi-document synthesis are natural candidates for high-context models like Claude Opus 4.6. Simpler interactions can be delegated to faster models, preserving resources without sacrificing quality. Engineering-specific tasks can be aligned with models optimized for code understanding.
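The mapping described above can be expressed as a small routing policy. The sketch below is illustrative only: the workflow attributes, the `LONG_CONTEXT_THRESHOLD` value, and the tier names are hypothetical, and the tiers are deliberately not bound to specific model identifiers, since real routing rules should come from an organization's own evaluations and pilots. The order of the checks is itself a policy choice. Returning a reason alongside each decision gives reviewers a record of why each workflow was assigned to a tier.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    # Hypothetical tier names; map them to concrete models after your own evaluations.
    HIGH_CONTEXT_REASONING = "high-context reasoning model"
    FAST_GENERAL = "fast, lower-cost model"
    CODE_SPECIALIZED = "code-specialized model"


@dataclass
class Workflow:
    name: str
    estimated_input_tokens: int
    multi_document: bool
    regulatory_exposure: bool
    primarily_code: bool


# Hypothetical threshold above which a workflow is treated as long-context.
LONG_CONTEXT_THRESHOLD = 50_000


def route(workflow: Workflow) -> tuple[Tier, str]:
    """Pick a model tier and return a human-readable reason for audit records."""
    if workflow.primarily_code:
        return Tier.CODE_SPECIALIZED, "input is mainly source code and technical docs"
    if workflow.regulatory_exposure:
        return Tier.HIGH_CONTEXT_REASONING, "regulatory exposure requires traceable reasoning"
    if workflow.multi_document or workflow.estimated_input_tokens > LONG_CONTEXT_THRESHOLD:
        return Tier.HIGH_CONTEXT_REASONING, "multi-document or long-context synthesis"
    return Tier.FAST_GENERAL, "short, transactional interaction"


if __name__ == "__main__":
    workflows = [
        Workflow("contract review", 180_000, True, True, False),
        Workflow("support chat", 2_000, False, False, False),
        Workflow("pull request review", 30_000, False, False, True),
    ]
    for wf in workflows:
        tier, reason = route(wf)
        print(f"{wf.name}: {tier.value} ({reason})")
```

Keeping the policy in one small, reviewable function makes it straightforward to change allocations when requirements shift, without touching the systems that call it.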

This layered approach supports governance by making model usage intentional and auditable. It also improves resilience, as teams can adjust allocations when requirements change rather than rearchitecting entire systems. Over time, enterprises that treat context as a first-class design parameter are better positioned to integrate AI into their operations in a way that is sustainable, explainable, and aligned with real-world decision-making.